The footage

I had to make sure that I own the copyright of the example clip and that nobody's privacy is violated. So I decided to make a short video featuring a toilet toy I bought in Tokyo in 2003. A friend of mine went to the shop a few years later, but it was already sold out.

The equipment

My camera is a simple mini-DV one. It's only SD, but since the gmerlin architecture is nicely scalable, the same encoder settings (except the picture size) should apply to HD as well. I connected the camera via firewire and recorded directly to the PC (no tape involved) with Kino.

Capture format

The camera sends DV frames (with encapsulated audio) via firewire to the PC. This format is called raw DV (extension .dv). The Kino user can choose whether to wrap the DV frames into AVI or Quicktime or export them raw. Since the raw DV format is completely self-contained, it was chosen as the input format for Gmerlin-transcoder. Wrapping DV into another container only makes sense for toolchains that cannot handle raw DV.

Quality considerations

My theory is that the crappy quality of many web-video services is partly due to financial considerations of the service providers (crappy files need less space on the server and less bandwidth for transmission), but partly also due to people making mistakes when preparing their videos. Here are some things to keep in mind:

1. You never do the final compression

In forums you often see people asking: How can I convert to flv for upload on youtube? The answer is: Don't do it. Even if you do it, it's unlikely that the server will take your video as it is. Many video services are known to use ffmpeg for importing the uploaded files, which can read much more than just flv. Install ffmpeg to check if it can read your files.
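A quick way to run that check from a script is sketched below. It assumes that `ffprobe` (shipped with current ffmpeg packages) is on the PATH. The helper names and the file name `clip.dv` are made up for illustration. A readable file only tells you that ffmpeg understands it, not that a particular service will accept it.

```python
import shutil
import subprocess


def build_probe_command(path):
    """Build an ffprobe call that lists type and codec of every stream."""
    return [
        "ffprobe",
        "-v", "error",                                    # print errors only
        "-show_entries", "stream=codec_type,codec_name",  # one entry per stream
        "-of", "csv=p=0",                                 # compact output
        path,
    ]


def can_ffmpeg_read(path):
    """True if ffprobe finds at least one stream, None if ffprobe is missing."""
    if shutil.which("ffprobe") is None:
        return None
    result = subprocess.run(build_probe_command(path),
                            capture_output=True, text=True)
    return result.returncode == 0 and bool(result.stdout.strip())


if __name__ == "__main__":
    # "clip.dv" is a hypothetical file name
    print(can_ffmpeg_read("clip.dv"))
```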

Compression parameters should be optimized for invisible artifacts in the file you upload. That's because the final compression (out of your control) will add more artifacts. And second-generation artifacts look even uglier; the results can be seen in many places on the web.

2. Minimize additional conversions on the server

If you scale your video to the same size it will have on the server, chances are good that the server won't rescale it. The advantage is that scaling happens on the raw material, resulting in minimal quality loss. Scaled video looks ugly if the original has compression artifacts, which would be the case if you let the server scale.

3. Don't forget to deinterlace

Interlaced video compressed in progressive mode looks extraordinarily ugly. Even more disappointing is that many people apparently forget to deinterlace. Even the crappiest deinterlacer is better than nothing.

4. Minimize artifacts by prefiltering

If, for whatever reason, artifacts are unavoidable, you can minimize them by slightly blurring the source material. Usually this shouldn't be necessary.

Format conversion

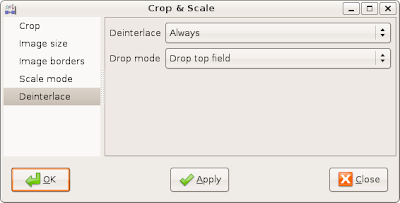

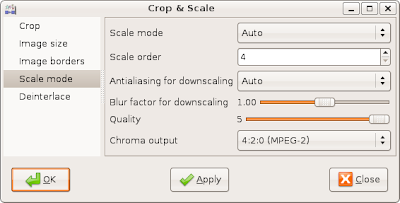

All video format conversions can be done in a single pass by the Crop & Scale filter. This gives maximum speed, smallest rounding errors and smallest blurring.

Deinterlacing

Sophisticated deinterlacing algorithms are only meaningful if the vertical resolution should be preserved. In our case, where the image is scaled down anyway, it's better to let the scaler deinterlace. Doing scaling and deinterlacing in one step also decreases the overall blurring of the image.
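The idea of deinterlacing inside the scaler can be illustrated with a minimal sketch. It is not the gavl implementation, just an assumed simplification: only the rows of one field are read, and the output rows are linearly interpolated between them. The function name is hypothetical.

```python
def scale_field_to_height(frame, out_height, field=0):
    """Deinterlace and scale vertically in one step.

    frame is a list of rows, each row a list of sample values.
    Only the rows of one field are read (field=0: even rows, field=1: odd
    rows) and the output rows are linearly interpolated between them.
    """
    rows = frame[field::2]                      # keep a single field
    out = []
    for y in range(out_height):
        # position of output row y, measured in field rows
        pos = y * (len(rows) - 1) / (out_height - 1) if out_height > 1 else 0.0
        i = int(pos)                            # field row above
        j = min(i + 1, len(rows) - 1)           # field row below
        w = pos - i                             # weight of the lower row
        out.append([(1 - w) * a + w * b for a, b in zip(rows[i], rows[j])])
    return out


if __name__ == "__main__":
    # Tiny 8-row "frame": even rows belong to one field, odd rows to the other
    frame = [[0.0] if y % 2 == 0 else [100.0] for y in range(8)]
    print(scale_field_to_height(frame, 6))      # only the even field survives
```

For a PAL frame this would mean going from the 288 rows of one field to the 380 output rows, instead of first rebuilding 576 rows and then scaling them down again.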

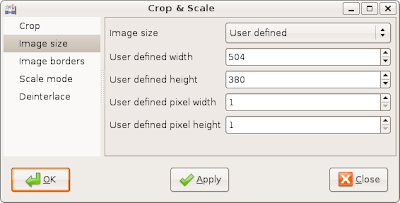

Scaling

Image size for Vimeo in SD seems to be 504x380. It's the size of their flash widget and also the size of the .flv video. Square pixels are assumed.

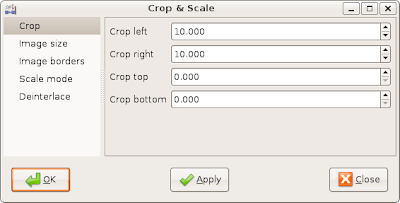

Cropping

The aspect ratio of PAL DV is a bit larger than 4:3. Also 504x380 with square pixels is not exactly 4:3. Experiments showed that cropping 10 pixels each from the left and right borders removes the black borders at the top and bottom. If your source material has a different size, these values will be different as well.
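The crop value can also be estimated by arithmetic. The sketch below assumes a PAL DV frame of 720x576 with a pixel aspect ratio of 59:54. That ratio is an assumption, not something measured here, and other tools use slightly different values. The function name is hypothetical.

```python
from fractions import Fraction

SRC_W, SRC_H = 720, 576            # PAL DV frame size
PIXEL_ASPECT = Fraction(59, 54)    # assumed PAL DV pixel aspect ratio
DST_W, DST_H = 504, 380            # Vimeo SD size, square pixels


def crop_per_side(src_w, src_h, pixel_aspect, dst_w, dst_h):
    """Columns to remove on each side so the source fills the target frame."""
    target_aspect = Fraction(dst_w, dst_h)
    # Width in source pixels whose display aspect equals the target aspect
    needed_w = target_aspect * src_h / pixel_aspect
    return (src_w - needed_w) / 2


crop = crop_per_side(SRC_W, SRC_H, PIXEL_ASPECT, DST_W, DST_H)
print(float(crop))                 # about 10.4 pixels per side
```

Under these assumptions the result agrees with the 10 pixels found by experiment.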

Chroma placement

Chroma placement for PAL DV is different from H.264 (which has the same chroma placement as MPEG-2). Depending on the gavl quality settings, this fact is either ignored or another video scaler is used for shifting the chroma locations later on. I thought that could be done smarter.

Since the gavl video scaler can do many things at the same time (it already does deinterlacing, cropping and scaling), it can also do chroma placement correction. For this, I made the chroma output format of the Crop & Scale filter configurable. If you set this to the format of the final output, subsequent scaling operations are avoided.

Since ffmpeg doesn't care about chroma placement it's probably unnecessary that we do. On the other hand, our method has zero overhead and does practically no harm.

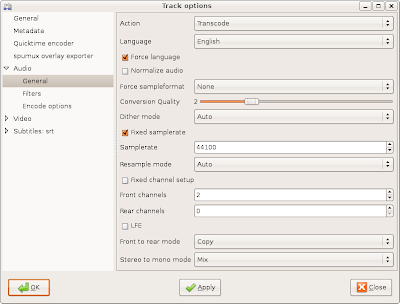

Audio

Vimeo wants audio to be sampled at 44.1 kHz, but most cameras record at 48 kHz. The following settings take care of that:

Encoding

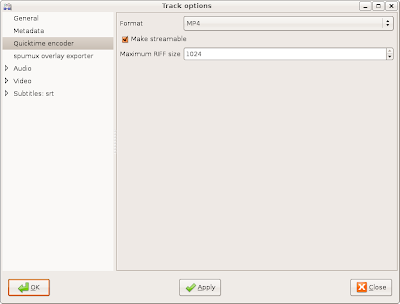

The codecs are H.264 for video and AAC for audio. Not only are they recommended by Vimeo, they do indeed give the best results for a given bitrate.

For some reason, Vimeo doesn't accept the AAC streams in Quicktime files created by libquicktime. Apple Quicktime, mplayer and ffmpeg accept them, and I found lots of forum posts describing exactly the same problem. So I believe that this is a Vimeo problem.

The solution I found is simple: Use mp4 instead of mov. People think mp4 and mov are identical, but that's not true. At least in this case it makes a difference. The compressed streams are, however, the same for both formats.

Format

The make streamable option is probably unnecessary, but I allow people to download the original .mp4 file and maybe they want to watch it while downloading.

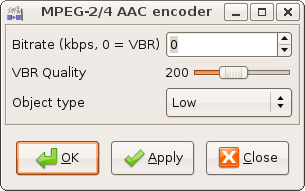

Audio codec

The default quality is 100, I increased that to 200. Hopefully this isn't the reason vimeo rejects the audio when in mov. The Object type should be Low (low complexity). Some decoders cannot decode anything else.

Video codec

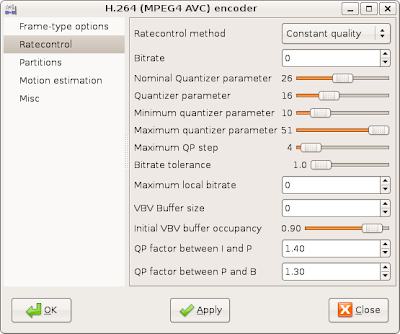

I decreased the maximum GOP size to 30 as recommended by Vimeo. B-frames still screw up some decoders, so I didn't enable them. All other settings are default.

I encode with constant quality. In quicktime, there is no difference between CBR and VBR video, so the decoder won't notice. Constant quality also has the advantage that this setting is independent from the image size. The quantizer parameter was decreased from 26 to 16 to increase quality. It could be decreased further.
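What the change from 26 to 16 means can be estimated with a bit of arithmetic. In H.264 the quantizer step size doubles for every increase of 6 in the quantizer parameter, so lowering it makes the quantization finer by a predictable factor. The function name below is made up for illustration.

```python
def quantizer_step_ratio(qp_from, qp_to):
    """Factor by which the H.264 quantizer step shrinks from qp_from to qp_to."""
    # The step size doubles for every 6 units of the quantizer parameter
    return 2 ** ((qp_from - qp_to) / 6)


print(round(quantizer_step_ratio(26, 16), 2))   # 3.17
```

So the setting used here quantizes roughly three times finer than the default, at the price of a larger file.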

Bugs

The following bugs were fixed during that process:

- Reading raw DV files was completely broken. I broke it when I implemented DVCPROHD support last summer.

- Chroma placement for H.264 is the same as for MPEG-2. This is now handled correctly by libquicktime and gmerlin-avdecoder.

- Blending of text subtitles onto video frames in the transcoder was broken as well. It's needed for the advertisement banner at the end.

- Gmerlin-avdecoder always signalled the existence of timecodes for raw DV. This is ok if the footage comes from a tape, but when recording on the fly my camera produces no timecodes. This resulted in a Quicktime file with a timecode track, but without timecodes. Gmerlin-avdecoder was modified to report timecodes only if the first frame actually contains a timecode.

- For making the screenshots, I called

LANG="C" gmerlin_transcoder

This switched the GUI to English, except for the items belonging to libquicktime. I found that libquicktime translated the strings way too early (the German strings were saved in the gmerlin plugin registry). I made a change to libquicktime so that the strings are only translated for the GUI widget. Internally they are always English.